Congratulations to Yu Zhang, a doctoral student in Professor Duoqian Miao’s team, on having his article "Enhancing Text-to-Image Diffusion Transformer via Split-Text Conditioning" accepted by NeurIPS 2025

A paper by PhD student Yu Zhang, titled "Enhancing Text-to-Image Diffusion Transformer via Split-Text Conditioning", has been accepted by NeurIPS 2025 (the 39th Annual Conference on Neural Information Processing Systems), a top-tier conference ranked Class A in artificial intelligence by the China Computer Federation (CCF). The work combines large language models with Diffusion Transformers to improve the semantic quality of generated images.

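Recently, the paper "Enhancing Text-to-Image Diffusion Transformer via Split-Text Conditioning" by Yu Zhang, a PhD student in the team, was accepted by the Thirty-ninth Annual Conference on Neural Information Processing Systems (NeurIPS 2025). NeurIPS, the Conference on Neural Information Processing Systems, is internationally recognized as one of the three top conferences in machine learning and represents the highest level of current artificial intelligence research. It is also listed as a Class A conference in the field of artificial intelligence in the China Computer Federation (CCF) ranking of international academic conferences.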

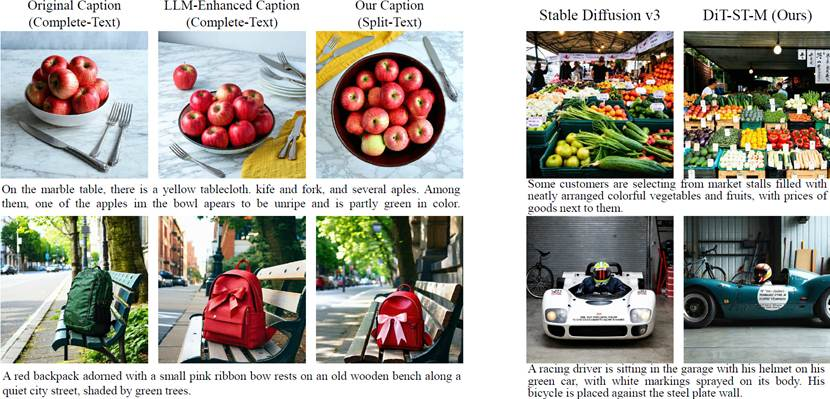

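The paper proposes DiT-ST (Split-Text Conditioning), a framework for improving the semantic quality of images generated by Diffusion Transformers (DiT). Existing DiT models typically take the complete text as a single conditioning input. For complex descriptions that mix several kinds of semantic primitives, such as objects, relations, and attributes, this tends to cause attribute mismatches, semantic entanglement, and omitted details. DiT-ST uses a large language model to parse the complete text into object, relation, and attribute primitives and builds a hierarchical Split-Text Conditioning from them, which reduces the structural complexity of the text and explicitly separates different semantic granularities. During diffusion inference, DiT-ST exploits the fact that different denoising stages differ in their sensitivity to each kind of primitive: it injects the conditions progressively over multiple stages, so that fine-grained information is not exposed too early and competing semantics do not interfere with the overall generation. Experiments show that DiT-ST effectively mitigates DiT's difficulty in understanding complete text and significantly improves semantic consistency and attribute binding on benchmarks such as GenEval and COCO-5K, validating the effectiveness of Split-Text Conditioning and multi-stage progressive condition injection in Diffusion Transformers.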
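
To make the idea of multi-stage progressive condition injection concrete, here is a minimal Python sketch. It is not the paper's implementation. The `SplitText` container, the stage boundaries in `STAGES`, the coarse-to-fine order (objects, then relations, then attributes), and the choice to accumulate levels rather than swap them are all assumptions made for illustration. The LLM parsing step is assumed to have already produced the three lists.

```python
from dataclasses import dataclass


@dataclass
class SplitText:
    """Hypothetical container for the semantic primitives parsed from one prompt."""
    objects: list[str]
    relations: list[str]
    attributes: list[str]


# Assumed stage boundaries, as fractions of denoising progress
# (0.0 = first denoising step, 1.0 = last). These values are illustrative only.
STAGES = [(0.0, "objects"), (0.3, "relations"), (0.6, "attributes")]


def active_levels(progress: float) -> list[str]:
    """Return the primitive levels that are injected at this denoising progress."""
    return [name for start, name in STAGES if progress >= start]


def condition_text(split: SplitText, step: int, num_steps: int) -> str:
    """Build the text condition for one denoising step, coarse levels first."""
    progress = step / max(num_steps - 1, 1)
    parts: list[str] = []
    for level in active_levels(progress):
        parts.extend(getattr(split, level))
    return "; ".join(parts)


# Hand-written example split; in the described framework an LLM would produce it.
split = SplitText(
    objects=["a cat", "a sofa"],
    relations=["the cat sits on the sofa"],
    attributes=["the cat is black", "the sofa is red"],
)
early = condition_text(split, step=0, num_steps=10)
middle = condition_text(split, step=4, num_steps=10)
late = condition_text(split, step=9, num_steps=10)
```

In this sketch the early steps see only the objects, the middle steps add the relations, and the late steps add the attributes, so fine-grained detail is withheld until the coarse layout has been conditioned. A real pipeline would pass each string through a text encoder and feed the resulting embedding to the DiT at that step.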