Congratulations to our team's master's student Zixuan Gong on the acceptance of the paper “MindTuner: Cross-Subject Visual Decoding with Visual Fingerprint and Semantic Correction” at AAAI 2025

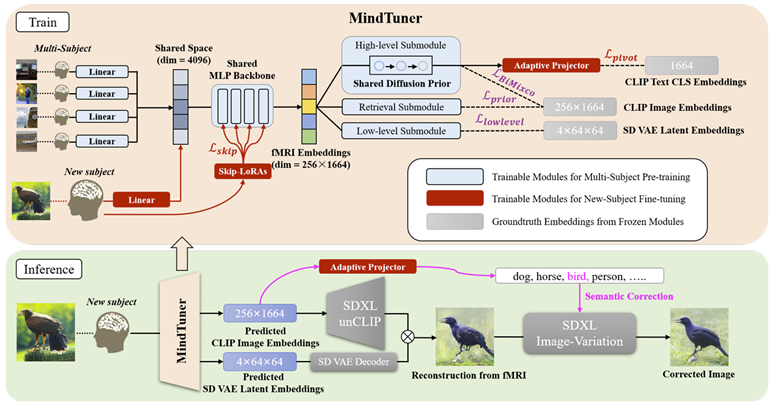

The paper "MindTuner: Cross-Subject Visual Decoding with Visual Fingerprint and Semantic Correction" by Zixuan Gong, a master's student on our team, has been accepted at AAAI 2025. The AAAI conference, organized by the Association for the Advancement of Artificial Intelligence, is one of the top-tier academic conferences in the field of artificial intelligence. As a Class A conference recommended by the China Computer Federation (CCF), AAAI is an essential venue for academic exchange and helps researchers stay at the forefront of AI developments. In this work, we propose MindTuner for cross-subject visual decoding. It achieves high-quality, semantically rich reconstructions using only 1 hour of fMRI training data by drawing on the visual fingerprint phenomenon in the human visual system and a novel fMRI-to-text alignment paradigm.

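AAAI is one of the top academic conferences in artificial intelligence and is hosted by the Association for the Advancement of Artificial Intelligence (AAAI). The conference is held once a year to present and exchange the latest research results, technical advances, and applications across all areas of AI. Its scope covers many aspects of the field, including machine learning, natural language processing, computer vision, automated reasoning, intelligent systems, and robotics. As a Class A conference recommended by the China Computer Federation (CCF), AAAI is an important platform for researchers to exchange ideas and follow the latest developments in AI.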

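Decoding natural visual scenes from brain activity has flourished, with extensive research on single-subject tasks but far less on cross-subject tasks. Reconstructing high-quality images in the cross-subject setting is challenging because of profound individual differences between subjects and the scarcity of annotated data. In this work, we propose MindTuner for cross-subject visual decoding, which achieves high-quality, semantically rich reconstructions using only 1 hour of fMRI training data by drawing on the visual fingerprint phenomenon in the human visual system and a novel fMRI-to-text alignment paradigm. First, a multi-subject model is pre-trained on 7 subjects and then fine-tuned with the scarce data available for a new subject, using LoRAs with Skip-LoRAs to learn the visual fingerprint. Then, the image modality is used as an intermediate pivot modality to align fMRI with text, which yields strong fMRI-to-text retrieval performance. The fine-tuned semantic information is further used to correct the fMRI-to-image reconstruction. Qualitative and quantitative analyses show that MindTuner outperforms current state-of-the-art cross-subject visual decoding models on the Natural Scenes Dataset (NSD), whether trained on 1 hour or 40 hours of data.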
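For readers unfamiliar with LoRA, the following is a minimal sketch of the general low-rank adaptation idea that the fine-tuning step builds on: a frozen pretrained weight plus a small trainable low-rank update. It is not MindTuner's implementation and does not cover Skip-LoRA; the class name `LoRALinear`, the helper `matvec`, and the parameters `rank` and `alpha` are illustrative assumptions, and the toy dimensions are arbitrary.

```python
import random


def matvec(M, x):
    """Multiply matrix M (a list of rows) by vector x."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]


class LoRALinear:
    """Frozen weight W plus a low-rank update B @ A (hypothetical names)."""

    def __init__(self, d_in, d_out, rank, alpha=1.0, seed=0):
        rng = random.Random(seed)
        # Frozen weight: stands in for a layer of a pretrained shared model.
        self.W = [[rng.gauss(0, 0.1) for _ in range(d_in)] for _ in range(d_out)]
        # Trainable adapter: A gets a small random init, B starts at zero,
        # so the adapted layer initially behaves exactly like the frozen one.
        self.A = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x):
        base = matvec(self.W, x)                    # frozen path
        delta = matvec(self.B, matvec(self.A, x))   # low-rank path
        return [b + self.scale * d for b, d in zip(base, delta)]

    def num_trainable(self):
        # Only A and B would be updated during fine-tuning.
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


layer = LoRALinear(d_in=8, d_out=4, rank=2)
x = [1.0] * 8
y = layer.forward(x)
```

In this toy setting the adapter has `rank * (d_in + d_out)` trainable values instead of `d_in * d_out`, which is why this family of methods suits fine-tuning on scarce per-subject data.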