Congratulations to our team's master's student Guangyin Bao on the acceptance of the paper “Wills Aligner: Multi-Subject Collaborative Brain Visual Decoding” at AAAI 2025

A paper titled "Wills Aligner: Multi-Subject Collaborative Brain Visual Decoding" by Guangyin Bao, a master's student from our team, has been accepted by AAAI 2025. Organized by the Association for the Advancement of Artificial Intelligence, AAAI is one of the top-tier academic conferences in artificial intelligence. As a Class A conference recommended by the China Computer Federation (CCF), it is an essential venue for academic exchange and for keeping researchers at the forefront of AI developments. The accepted paper presents a novel pipeline for multi-subject brain visual decoding, offering insights into the collaborative analysis of brain data from multiple subjects.

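Held annually, AAAI showcases and exchanges the latest research results, technical advances, and practical applications across all areas of artificial intelligence, including machine learning, natural language processing, computer vision, automated reasoning, intelligent systems, and robotics.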

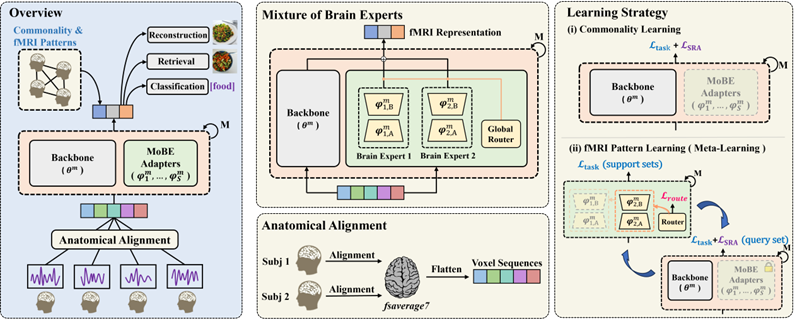

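Decoding visual information from human brain activity has made remarkable progress in recent research. However, because cortical parcellation and fMRI response patterns differ across individuals, existing methods must train a dedicated deep learning model for each subject. Such subject-specific models limit the applicability of brain visual decoding in real-world scenarios. To address this problem, the paper proposes Wills Aligner, a multi-subject collaborative brain visual decoding method. It first aligns fMRI data from different subjects at the anatomical level to build a shared representational space. It then adopts a mixture of brain experts and a meta-learning strategy to accommodate diverse fMRI response patterns. In addition, Wills Aligner uses the semantic relationships among visual stimuli to learn what subjects have in common, so that visual decoding for each subject can benefit from other subjects' data. The paper comprehensively evaluates Wills Aligner on several visual decoding tasks, including classification, cross-modal retrieval, and image reconstruction, and the experimental results show that it achieves satisfactory performance.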
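The mixture-of-experts idea mentioned above can be illustrated with a generic soft-gated mixture of experts. This is a minimal sketch, not Wills Aligner's implementation. It assumes that each subject's fMRI sample has already been mapped into a shared anatomical space, so every subject yields a vector of the same length. The names `moe_forward`, `matvec`, and `softmax`, as well as the toy weights, are hypothetical. A gating matrix scores each expert from the input, and the output is the gate-weighted sum of the experts' linear projections.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply matrix w (rows x cols) by vector x (length cols)."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def softmax(z: Vector) -> Vector:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, experts: List[Matrix], gate: Matrix) -> Vector:
    """Hypothetical soft mixture of experts: y = sum_k g_k(x) * (E_k x).

    Assumes x is an fMRI sample already resampled into a shared
    anatomical space, so all subjects produce vectors of equal length.
    `gate` has one row per expert and produces the gating logits.
    Generic illustration only, not the Wills Aligner architecture.
    """
    if len(gate) != len(experts):
        raise ValueError("gate must have one row per expert")
    weights = softmax(matvec(gate, x))          # one weight per expert, sums to 1
    outputs = [matvec(e, x) for e in experts]   # each expert's linear projection
    dim = len(outputs[0])
    return [sum(w * out[i] for w, out in zip(weights, outputs)) for i in range(dim)]


if __name__ == "__main__":
    # Toy example: two experts (identity and 2x identity) and a zero gate,
    # so both experts get weight 0.5 and the output is 1.5 * x.
    x = [1.0, 2.0]
    experts = [
        [[1.0, 0.0], [0.0, 1.0]],
        [[2.0, 0.0], [0.0, 2.0]],
    ]
    gate = [[0.0, 0.0], [0.0, 0.0]]
    print(moe_forward(x, experts, gate))  # [1.5, 3.0]
```

A soft gate lets every expert contribute in proportion to its weight. Samples with different response patterns can therefore lean on different experts while parameters remain shared across subjects. The paper's actual expert design, gating, and meta-learning procedure may differ from this sketch.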